Update (2018-03-22): Since I wrote this document back in 2014, Docker has developed the macvlan network driver. That gives you a supported mechanism for direct connectivity to a local layer 2 network. I’ve written an article about working with the macvlan driver.

This article discusses four ways to make a Docker container appear on a local network. These are not suggested as practical solutions, but are meant to illustrate some of the underlying network technology available in Linux.

If you were actually going to use one of these solutions as anything other than a technology demonstration, you might look to the pipework script, which can automate many of these configurations.

Goals and Assumptions

The host network configuration only works as expected on Linux systems, because Docker uses a virtual machine under the hood on Mac and Windows, so the host network in these cases refers to the VM rather than the real host itself. (I have not used a host network on a Windows machine with a Windows-based container, so I cannot comment on that.)

In the following examples, we have a host with address 10.12.0.76 on the 10.12.0.0/21 network. We are creating a Docker container that we want to expose as 10.12.0.117.

I am running Fedora 20 with Docker 1.1.2. This means, in particular, that my util-linux package is recent enough to include the nsenter command. If you don’t have that handy, there is a convenient Docker recipe to build it for you at jpetazzo/nsenter on GitHub.

A little help along the way

In this article we will often refer to the PID of a docker container. In order to make this convenient, drop the following into a script called docker-pid, place it somewhere on your PATH, and make it executable:
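A minimal sketch of such a script, assuming the container's PID is available as the `.State.Pid` field of `docker inspect`. The `docker_pid` function name and the argument guard are illustrative choices, not requirements:

```shell
#!/bin/sh
# docker-pid: print the PID of a container's main process.
# Assumes `docker inspect` exposes the PID as .State.Pid.
docker_pid() {
    docker inspect --format '{{ .State.Pid }}' "$@"
}

# Only query Docker when a container name or ID was given.
if [ "$#" -gt 0 ]; then
    docker_pid "$@"
fi
```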

This allows us to conveniently get the PID of a docker container by name or ID:

In a script called docker-ip, place the following:
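A minimal sketch, assuming the container is attached to the default `docker0` bridge so that its address appears in the `.NetworkSettings.IPAddress` field of `docker inspect`. On other network setups or Docker versions that field may be empty. The `docker_ip` function name is illustrative:

```shell
#!/bin/sh
# docker-ip: print the IP address Docker assigned to a container.
# Assumes the address is reported as .NetworkSettings.IPAddress.
docker_ip() {
    docker inspect --format '{{ .NetworkSettings.IPAddress }}' "$@"
}

# Only query Docker when a container name or ID was given.
if [ "$#" -gt 0 ]; then
    docker_ip "$@"
fi
```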

And now we can get the ip address of a container like this:

Using NAT

This uses the standard Docker network model combined with NAT rules on your host to redirect inbound traffic to/outbound traffic from the appropriate IP address.

Assign our target address to your host interface:

Start your docker container, using the -p option to bind exposed ports to an ip address and port on the host:
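Both steps can be sketched together as follows. The interface name em1, the /21 prefix, and the container name web come from the examples in this article; the function name and the image name `example/simpleweb` are placeholders, so substitute any image that serves HTTP on port 80.

```shell
# Sketch: publish a container's port 80 on a dedicated host address.
# Run as root.
#   $1 = address with prefix length, e.g. 10.12.0.117/21
#   $2 = host interface, e.g. em1
#   $3 = image name (placeholder; any image serving HTTP on port 80)
start_web_nat() {
    addr=$1 dev=$2 image=$3
    # Add the target address to the host interface.
    ip addr add "$addr" dev "$dev" &&
    # Bind container port 80 to port 80 on that address only.
    docker run -d --name web -p "${addr%/*}:80:80" "$image"
}
```

For example: `start_web_nat 10.12.0.117/21 em1 example/simpleweb`.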

With this command, Docker will set up the standard network model:

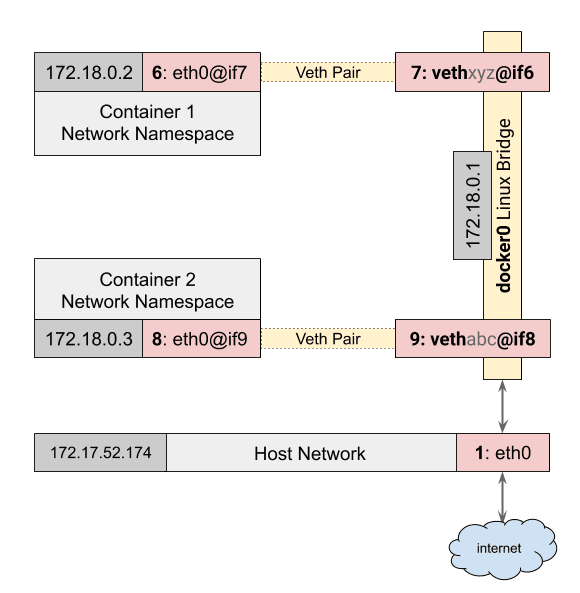

- It will create a veth interface pair.
- Connect one end to the docker0 bridge.
- Place the other inside the container namespace as eth0.
- Assign an ip address from the network used by the docker0 bridge.

Because we added -p 10.12.0.117:80:80 to our command line, Docker will also create the following rule in the nat table DOCKER chain (which is run from the PREROUTING chain):

This matches traffic TO our target address (-d 10.12.0.117/32) not originating on the docker0 bridge (! -i docker0) destined for tcp port 80 (-p tcp -m tcp --dport 80). Matching traffic has its destination set to the address of our docker container (-j DNAT --to-destination 172.17.0.4:80).

From a host elsewhere on the network, we can now access the web server at our selected ip address:

If our container were to initiate a network connection with another system, that connection would appear to originate with the ip address of our host. We can fix that by adding a SNAT rule to the POSTROUTING chain to modify the source address:
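A sketch of such a rule, built from standard iptables options. The function name is illustrative, and the container address would come from the docker-ip helper described earlier:

```shell
# Sketch: make outbound container traffic appear to come from the
# target address. Run as root.
#   $1 = container address, e.g. the output of `docker-ip web`
#   $2 = source address to present, e.g. 10.12.0.117
add_snat_rule() {
    # -I inserts the rule at the top of POSTROUTING in the nat table.
    iptables -t nat -I POSTROUTING -s "$1" -j SNAT --to-source "$2"
}
```

For example: `add_snat_rule "$(docker-ip web)" 10.12.0.117`.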

Note here the use of -I POSTROUTING, which places the rule at the top of the POSTROUTING chain. This is necessary because, by default, Docker has already added the following rule to the top of the POSTROUTING chain:

Because this MASQUERADE rule matches traffic from any container, we need to place our rule earlier in the POSTROUTING chain for it to have any effect.

With these rules in place, traffic to 10.12.0.117 (port 80) is directed to our web container, and traffic originating in the web container will appear to come from 10.12.0.117.

With Linux Bridge devices

The previous example was relatively easy to configure, but has a few shortcomings. If you need to configure an interface using DHCP, or if you have an application that needs to be on the same layer 2 broadcast domain as other devices on your network, NAT rules aren’t going to work out.

This solution uses a Linux bridge device, created using brctl, to connect your containers directly to a physical network.

Start by creating a new bridge device. In this example, we’ll create one called br-em1:
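A minimal sketch using brctl from the bridge-utils package; the function name is illustrative:

```shell
# Sketch: create a Linux bridge and bring it up. Run as root.
#   $1 = bridge name, e.g. br-em1
create_bridge() {
    brctl addbr "$1" &&
    # A new bridge starts out down; bring it up before using it.
    ip link set "$1" up
}
```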

We’re going to add em1 to this bridge, and move the ip address from em1 onto the bridge.

WARNING: This is not something you should do remotely, especially for the first time, and making this persistent varies from distribution to distribution, so this will not be a persistent configuration.

Look at the configuration of interface em1 and note the existing ip address:

Look at your current routes and note the default route:

Now, add this device to your bridge:

Configure the bridge with the address that used to belong to em1:

And move the default route to the bridge:

If you were doing this remotely, you would do this all in one line like this:
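The whole move can be sketched as a single function so that it runs as one unit. The function name is illustrative, and the gateway is a parameter because it depends on your network; take it from the default route you noted earlier. The steps deliberately run one after another without stopping on errors, so that a failure in the middle does not leave the host without a default route.

```shell
# Sketch: move the host address and default route from a physical
# interface onto a bridge in a single step. Run as root.
#   $1 = physical interface (em1)    $2 = bridge (br-em1)
#   $3 = address with prefix (10.12.0.76/21)
#   $4 = default gateway (assumption: read it from `ip route` first)
move_addr_to_bridge() {
    ip addr add "$3" dev "$2"
    ip addr del "$3" dev "$1"
    brctl addif "$2" "$1"
    # Ignore a failure here in case the old default route is already gone.
    ip route del default 2>/dev/null || true
    ip route add default via "$4" dev "$2"
}
```

For example, with a hypothetical gateway of 10.12.0.1: `move_addr_to_bridge em1 br-em1 10.12.0.76/21 10.12.0.1`.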

At this point, verify that you still have network connectivity:

Start up the web container:

This will give us the normal eth0 interface inside the container, but we’re going to ignore that and add a new one.

Create a veth interface pair:

Add the web-ext link to the br-em1 bridge:

And add the web-int interface to the namespace of the container:
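The three steps above can be sketched as one function. The interface names web-int and web-ext are the ones used in this article; the function name is illustrative, and the PID would come from the docker-pid helper. Bringing up web-ext on the host side is an extra step included here so that the link can carry traffic.

```shell
# Sketch: wire a container to a bridge with a veth pair. Run as root.
#   $1 = bridge name (br-em1)
#   $2 = container PID, e.g. the output of `docker-pid web`
attach_veth() {
    # Create the pair: web-int goes to the container, web-ext stays here.
    ip link add web-int type veth peer name web-ext &&
    # Attach the host end to the bridge and bring it up.
    brctl addif "$1" web-ext &&
    ip link set web-ext up &&
    # Move the other end into the container's network namespace.
    ip link set web-int netns "$2"
}
```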

Next, we’ll use the nsenter command (part of the util-linux package) to run some commands inside the web container. Start by bringing up the link inside the container:

Assign our target ip address to the interface:

And set a new default route inside the container:
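These in-container steps can be sketched with `nsenter -t PID -n`, which runs a command inside the network namespace of the given process. The function name is illustrative, and the gateway is an assumption: it is normally the same gateway the host uses.

```shell
# Sketch: configure the new interface from inside the container's
# network namespace. Run as root.
#   $1 = container PID          $2 = interface (web-int)
#   $3 = address with prefix (10.12.0.117/21)
#   $4 = default gateway (assumption: same gateway the host uses)
configure_container_if() {
    nsenter -t "$1" -n ip link set "$2" up &&
    nsenter -t "$1" -n ip addr add "$3" dev "$2" &&
    # Replace Docker's default route via eth0 with one via the new link.
    nsenter -t "$1" -n ip route del default &&
    nsenter -t "$1" -n ip route add default via "$4" dev "$2"
}
```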

Again, we can verify from another host that the web server is available at 10.12.0.117:

Note that in this example we have assigned a static ip address, but we could just as easily have acquired an address using DHCP. After running:

We can run:

With Open vSwitch Bridge devices

This process is largely the same as in the previous example, but we use Open vSwitch instead of the legacy Linux bridge devices. These instructions assume that you have already installed and started Open vSwitch on your system.

Create an OVS bridge using the ovs-vsctl command:

And add your external interface:
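A minimal sketch of both steps using the `add-br` and `add-port` subcommands of ovs-vsctl. The function name is illustrative; the same `add-port` call attaches any other interface, such as a veth end, to the bridge.

```shell
# Sketch: create an Open vSwitch bridge and attach an interface to it.
# Run as root.
#   $1 = bridge name (br-em1)    $2 = interface to attach (em1)
ovs_create_bridge() {
    ovs-vsctl add-br "$1" &&
    ovs-vsctl add-port "$1" "$2"
}
```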

And then proceed as in the previous set of instructions.

The equivalent all-in-one command is:

Once that completes, your openvswitch configuration should look like this:

To add the web-ext interface to the bridge, run:

Instead of:

WARNING: The Open vSwitch configuration persists between reboots. This means that when your system comes back up, em1 will still be a member of br-em1, which will probably result in no network connectivity for your host.

Before rebooting your system, make sure to ovs-vsctl del-port br-em1 em1.

With macvlan devices

This process is similar to the previous two, but instead of using a bridge device we will create a macvlan, which is a virtual network interface associated with a physical interface. Unlike the previous two solutions, this does not require any interruption to your primary network interface.

Start by creating a docker container as in the previous examples:

Create a macvlan interface associated with your physical interface:

This creates a new macvlan interface named em1p0 (but you can name it anything you want) associated with interface em1. We are setting it up in bridge mode, which permits all macvlan interfaces to communicate with each other.
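A sketch of the creation step using the iproute2 `ip link add ... type macvlan` syntax; the function name is illustrative:

```shell
# Sketch: create a macvlan interface in bridge mode. Run as root.
#   $1 = new interface name (em1p0)   $2 = parent interface (em1)
create_macvlan() {
    ip link add "$1" link "$2" type macvlan mode bridge
}
```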

Add this interface to the container’s network namespace:


Bring up the link:

And configure the ip address and routing:

And demonstrate that from another host the web server is available at 10.12.0.117:


But note that if you were to try the same thing on the host, you would get:

The host is unable to communicate with macvlan devices via the primary interface. You can create another macvlan interface on the host, give it an address on the appropriate network, and then set up routes to your containers via that interface:
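A sketch of that workaround. The function name, the host-side interface name, and the host-side address are all hypothetical; pick an interface name that is not already in use and an address that is free on your network.

```shell
# Sketch: give the host its own macvlan interface so it can reach
# macvlan-attached containers. Run as root.
#   $1 = new interface name (hypothetical, e.g. em1p1)
#   $2 = parent interface (em1)
#   $3 = unused address with prefix on the local network (assumption)
#   $4 = container address (10.12.0.117)
host_macvlan_route() {
    ip link add "$1" link "$2" type macvlan mode bridge &&
    ip addr add "$3" dev "$1" &&
    ip link set "$1" up &&
    # Send traffic for the container through the macvlan interface.
    ip route add "$4" dev "$1"
}
```

For example, with a hypothetical free address: `host_macvlan_route em1p1 em1 10.12.0.200/21 10.12.0.117`.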

Back on the server side, we can do an inspect of the docker container as well as the network again to see some more info. If you are new to Docker, creating a container without a name causes it to have a randomly generated and usually hilarious name, in our case here distracted_lamarr.

So a lot of good info here. I’ve highlighted some things which you can verify, like the IP and MAC address which were automatically assigned. The docker inspect command can help you find a ton of great info.

Notice one thing I highlighted which is the SandboxKey. This will answer our question of where the network devices went and how they are tied together. Linux uses network namespaces to essentially segment things out logically. So your container uses a different network namespace (which Docker calls a sandbox) than the server, hence we don’t see the interfaces of one in the other. And in fact, veth interfaces are designed to span separate network namespaces.

How can we see the network namespace, or even know that it is a real thing? Great question! It is actually not very straightforward. Normally network namespaces are defined in /var/run/netns which is where the command to view them (ip netns list) looks for them. Unfortunately as you can see, Docker stores them in a separate place (/var/run/docker/netns). In my case because this is just a dummy test system, I can fix this easily by softlinking /var/run/netns to the docker location. Personally I wouldn’t do this in a real system but this is what testing is for!
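A sketch of that symlink trick, for test systems only. The two paths are the ones named above and are used as defaults; the function name and the option to override the paths are illustrative additions.

```shell
# Sketch: expose Docker's network namespaces to `ip netns`.
# Test systems only. Run as root.
#   $1 = Docker's namespace directory (default /var/run/docker/netns)
#   $2 = directory `ip netns` reads (default /var/run/netns)
link_docker_netns() {
    src=${1:-/var/run/docker/netns}
    dst=${2:-/var/run/netns}
    # Refuse to clobber an existing directory or link.
    if [ -e "$dst" ] || [ -L "$dst" ]; then
        echo "$dst already exists" >&2
        return 1
    fi
    ln -s "$src" "$dst" &&
    ip netns list
}
```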

Here after I’ve linked the network namespace to the proper location, my command returns something. We can see the network namespace id is 0 which also happens to be the link-netnsid value from ip addr show. We can also see that the name is 3be322af84fc which matches up properly from the docker inspect information.

Now that we know the namespace and have the linking, I can actually exec against that namespace and verify that we are looking at the right thing.

Recall that this is the same output from the container, but this is executed from the server using the ip netns exec command.

Finally (almost done!) I’ll show some diagrams and show you a few more interesting things before closing out.